Event-Driven Architecture with Serverless Functions – Part 1

This is the 1st part of the series “A new type of stream processing.”

In this series of articles, we explain what the missing piece in stream processing is. In this part, we start from the source: we break down the different components and walk through how they can be used in tandem to drive modern software.

First things first: event-driven architecture (EDA). EDA and serverless functions are two powerful software concepts that have become popular in recent years with the rise of cloud-native computing. While one is an architecture pattern and the other is more of a deployment or implementation detail, together they provide a scalable and efficient foundation for modern applications.

What is Event-Driven Architecture?

EDA is a software architecture pattern that utilizes events to decouple various components of an application. In this context, an event is defined as a change in state. For example, for an e-commerce application, an event could be a customer clicking on a listing, adding that item to their shopping cart, or submitting their credit card information to buy. Events also encompass non-user-initiated state changes, such as scheduled jobs or notifications from a monitoring system.
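To make "an event is a change in state" concrete, here is a minimal sketch of how an event could be represented in code. The `Event` class, its field names, and the event type strings are hypothetical and chosen only for illustration; real systems typically define their own schema.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any
import uuid


@dataclass(frozen=True)
class Event:
    """An immutable record of a state change (hypothetical schema)."""
    type: str                    # what changed, e.g. "cart.item_added"
    payload: dict[str, Any]      # data describing the change
    source: str = "user"         # who caused it: "user", "scheduler", ...
    id: str = field(default_factory=lambda: str(uuid.uuid4()))
    occurred_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


# A user-initiated event and a non-user-initiated one.
added = Event("cart.item_added", {"customer_id": 42, "sku": "ABC-1"})
nightly = Event("report.scheduled", {"report": "daily-sales"}, source="scheduler")
```

Making the event immutable is a design choice: an event describes something that already happened, so consumers should not be able to modify it.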

The primary goal of EDA is to create loosely coupled components or microservices that communicate asynchronously by producing and consuming events. This way, different components of the system can scale up or down independently for availability and resilience. This decoupling also allows development teams to add or release new features more quickly and safely, as long as each component's interface remains compatible.

The Usual Components

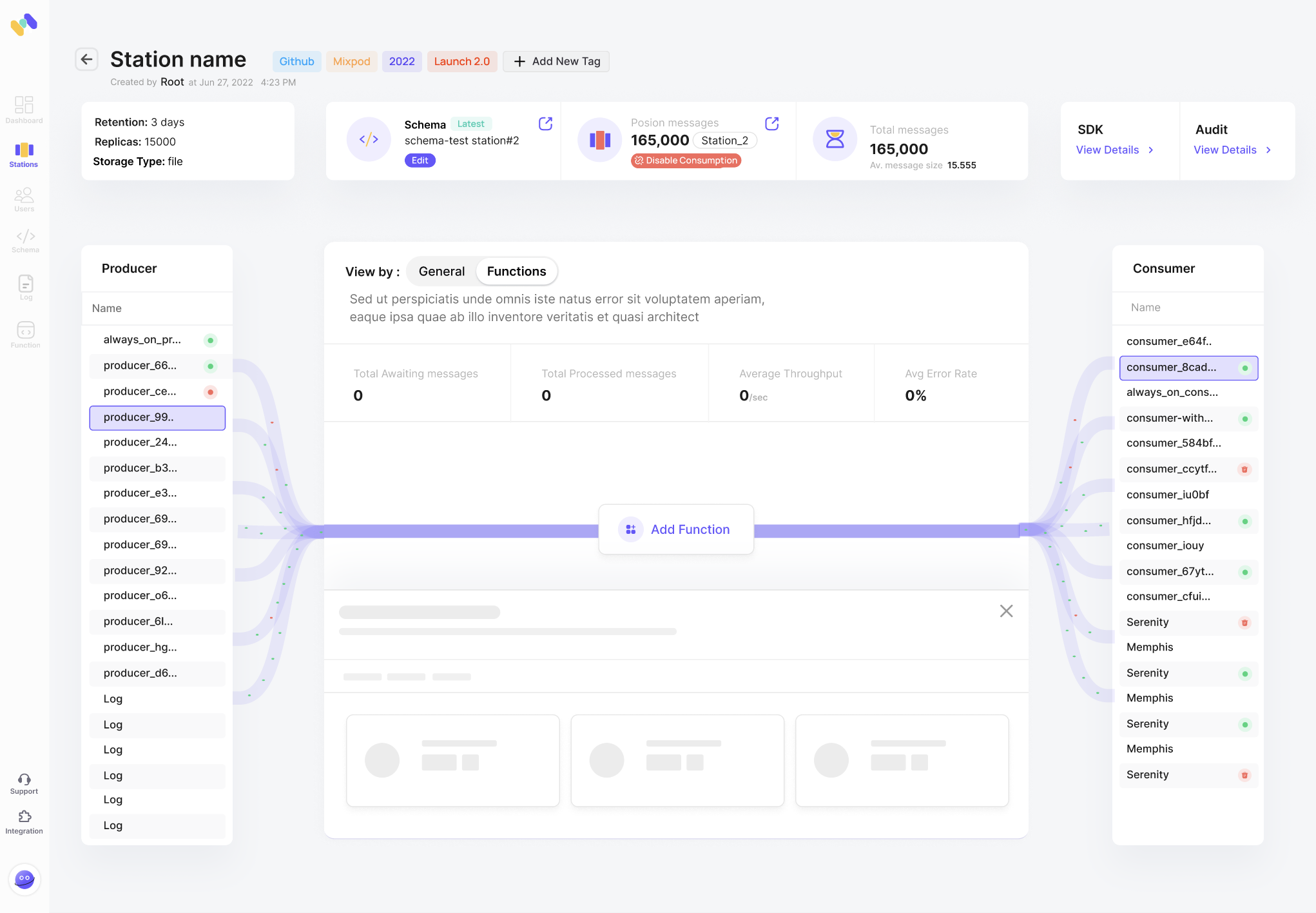

A scalable event-driven architecture will comprise three key components:

- Producer: components that publish or produce events. These can be frontend services that take in user input, edge devices like IoT systems, or other types of applications.

- Broker: components that take in events from producers and deliver them to consumers. Examples include Kafka, Memphis.dev, or Amazon SQS.

- Consumer: components that listen to events and act on them.

It is also important to note that some components may be a producer for one workflow while being a consumer for another. For example, a credit card processing service could be a consumer for events that involve credit cards, such as new purchases or updates to credit card information. At the same time, this service may be a producer for downstream services that record purchase history or detect fraudulent activity.
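The three roles can be sketched with a toy in-memory broker. This is only an illustration under simplifying assumptions: `InMemoryBroker`, the topic names, and the service functions are all hypothetical, and delivery here is synchronous, whereas a real broker such as Kafka delivers asynchronously and persists events.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class InMemoryBroker:
    """Toy stand-in for a real broker; delivers synchronously for simplicity."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        # Deliver the event to every handler registered on this topic.
        for handler in list(self._subscribers[topic]):
            handler(event)


broker = InMemoryBroker()
purchase_history: list[dict] = []


def card_service(event: dict) -> None:
    """Consumer of purchase events AND producer of payment events."""
    charged = {"order_id": event["order_id"], "status": "charged"}
    broker.publish("payments.completed", charged)


def history_service(event: dict) -> None:
    """Pure consumer: records completed payments."""
    purchase_history.append(event)


broker.subscribe("purchases.created", card_service)
broker.subscribe("payments.completed", history_service)

# The frontend acts as the producer of the original event.
broker.publish("purchases.created", {"order_id": "o-1", "amount": 19.99})
print(purchase_history)  # [{'order_id': 'o-1', 'status': 'charged'}]
```

Note that the frontend never calls the card service directly, and the card service knows nothing about the history service. Each side only knows the topic it publishes to or subscribes to.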

Common Patterns

Since EDA is a broad architectural pattern, it can be applied in many ways. Some common patterns include:

- Point-to-point messaging: For applications that need a simple one-to-one communication channel, a point-to-point messaging pattern may be used with a simple queue. Events are sent to a queue (a messaging channel) and buffered until the consumer is ready to process them.

- Pub/sub: If multiple consumers need to listen to the same events, pub/sub style messaging may be used. In this scenario, the producer generates events on a topic that consumers can subscribe to. This is useful for scenarios where events need to be broadcast (e.g. replication) or different business logic must be applied to the same event.

- Communication models: Different use cases dictate how communication should be coordinated. In some cases, it must be orchestrated via a centralized service if logic involves some distinct steps with dependencies. In other cases, it can be choreographed where producers can generate events without worrying about downstream dependencies as long as the events adhere to a predetermined schema or format.
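The difference between the first two patterns can be shown in a few lines. This is a deliberately simplified sketch: the function names are hypothetical, and everything runs in one process, whereas in practice the queue or topic would live in a broker.

```python
from collections import deque


def point_to_point(events: list[str]) -> list[str]:
    """One queue, one consumer: events are buffered, then handled once each, in order."""
    queue = deque(events)        # producer side: events are enqueued
    handled = []
    while queue:                 # consumer side: drain the queue when ready
        handled.append(queue.popleft())
    return handled


def pub_sub(events: list[str], subscribers: list[str]) -> dict[str, list[str]]:
    """One topic, many subscribers: every subscriber receives every event."""
    inboxes: dict[str, list[str]] = {name: [] for name in subscribers}
    for event in events:
        for inbox in inboxes.values():
            inbox.append(event)  # each subscriber gets its own copy
    return inboxes


print(point_to_point(["e1", "e2"]))
print(pub_sub(["e1", "e2"], ["replicator", "analytics"]))
```

In the queue case each event is consumed exactly once. In the pub/sub case the same event reaches both the hypothetical `replicator` and `analytics` subscribers, which can then apply different business logic to it.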

EDA Use Cases

Event-driven architecture became much more popular with the rise of cloud-native applications and microservices. We are not always aware of it, but companies such as Uber, DoorDash, Netflix, Lyft, Instacart, and many more rely heavily on event-driven, asynchronous architectures.

Another key use case is processing event data that requires massive parallelization and elasticity as load changes.

Let’s talk about Serverless Functions

Serverless functions are a subset of the serverless computing model, where a third party (typically a cloud or service provider) or some orchestration engine manages the infrastructure on behalf of the users and only charges on a per-use basis. In particular, serverless functions or Function-as-a-Service (FaaS) allow users to write small functions as opposed to full-fledged services with server logic and abstract away the typical “server” functionality such as HTTP listeners, scaling, and monitoring. To developers, serverless functions can simplify their workflow significantly as they can focus on the business logic and allow the service provider to bootstrap the infrastructure and server functionalities.

Serverless functions are usually triggered by external events. This can be an HTTP call or events on a queue or a pub/sub-like messaging system. Serverless functions are generally stateless and designed to handle individual events. When there are multiple calls, the service provider will automatically scale up functions as needed unless parallelism is limited to one by the user. While different implementations of serverless functions have varying execution limits, in general, serverless functions are meant to be short-lived and should not be used for long-running jobs.
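A stateless function that handles a single event might look like the sketch below. The `handler(event, context)` shape is modeled loosely on the convention used by AWS Lambda's Python runtime; the `body` and `statusCode` fields and the `item` key are assumptions made for this example, and other providers use different signatures.

```python
import json


def handler(event: dict, context: object = None) -> dict:
    """Handle one event; keep no state between invocations."""
    # Assumption: the platform passes the HTTP body as a JSON string.
    body = json.loads(event.get("body") or "{}")
    item = body.get("item")
    if item is None:
        return {"statusCode": 400, "body": json.dumps({"error": "item is required"})}
    return {"statusCode": 200, "body": json.dumps({"added": item})}


# Simulating what the platform would do on an incoming HTTP call.
response = handler({"body": json.dumps({"item": "sku-123"})})
print(response["statusCode"])  # 200
```

There is no HTTP listener, no server loop, and no scaling logic in the function itself. Those are the parts the provider takes over.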

How about combining EDA and Serverless Functions?

As you probably noticed, since serverless functions are triggered by events, they make for a great pairing with event-driven architectures. This is especially true for stateless services that can be short-lived. A lot of microservices probably fall into this bucket, unless they are responsible for batch processing or heavy analytics that push the execution limit.

The benefit of using serverless functions with event-driven architecture is the reduced overhead of managing the underlying infrastructure, which frees up developers’ time to focus on the logic that drives business value. Also, since service providers only charge per use, it can be a cost-efficient alternative to running self-hosted infrastructure on servers, VMs, or containers.

Sounds like the perfect match, right? Why isn’t it everywhere?

AWS is doing its best to push us to use Lambda, Cloudflare invests hundreds of millions of dollars to convince developers to use its serverless framework, and GCP and others are making similar efforts. Even so, in the context of EDA or data processing, it still feels “harder” than building a traditional service.

Among the reasons are:

- Lack of observability. What went in and what went out?

- Debugging is still hard. When a function processes data that will only arrive in the future, a great debugging experience is a must, as the slightest change in the data's structure can break the function.

- Retries. A reliable retry mechanism for failed events often has to be designed and wired up by the developer.

- Batching. Converting a batch of incoming messages into a processing batch takes extra work.
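To give a sense of the plumbing these points imply, here is a rough sketch of what a developer might end up writing around a function by hand: input/output logging, a retry loop, and batching. All names (`batches`, `with_retry`, `flaky`) are hypothetical, and the retry is intentionally naive. A production version would likely add backoff and a dead-letter destination.

```python
import logging
from typing import Callable, Iterable, Iterator

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("fn-wrapper")

BatchFn = Callable[[list[dict]], list[dict]]


def batches(messages: Iterable[dict], size: int) -> Iterator[list[dict]]:
    """Group incoming messages into processing batches of at most `size`."""
    batch: list[dict] = []
    for message in messages:
        batch.append(message)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:                      # emit the final, possibly smaller batch
        yield batch


def with_retry(fn: BatchFn, attempts: int = 3) -> BatchFn:
    """Wrap `fn` so failures are retried and inputs/outputs are logged."""
    def wrapped(batch: list[dict]) -> list[dict]:
        for attempt in range(1, attempts + 1):
            try:
                log.info("in: %s", batch)       # observability: what went in
                result = fn(batch)
                log.info("out: %s", result)     # observability: what went out
                return result
            except Exception:
                log.exception("attempt %d/%d failed", attempt, attempts)
        raise RuntimeError(f"batch failed after {attempts} attempts")
    return wrapped


calls = {"n": 0}


def flaky(batch: list[dict]) -> list[dict]:
    """Hypothetical business logic that fails on its first invocation."""
    calls["n"] += 1
    if calls["n"] == 1:
        raise ValueError("transient failure")
    return [{"id": m["id"], "ok": True} for m in batch]


process = with_retry(flaky)
results = [process(b) for b in batches([{"id": i} for i in range(5)], size=2)]
```

None of this code is business logic, yet every function in the pipeline needs some version of it.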

The idea of combining both technologies is very interesting. Ultimately, it can save a great number of dev hours and effort, and add capabilities that are extremely hard to develop with traditional methodologies. However, the reasons listed above are still major blockers.

I will end part 1 with a simple, open question/teaser:

Have you ever used Zapier?

Stay tuned for part 2 and get one step closer to learning more about the new way to do stream processing.