Memphis.dev Cloud Performance and Load testing

Introduction

This article presents the most recent Memphis.dev Cloud multi-region benchmark tests, conducted in December 2023. It explains how to carry out performance testing with hands-on methods you can apply yourself, and it provides the resulting benchmark data for your reference.

The benchmark tool we used can be found here.

The objective of this performance testing is to:

- Measure the volume of messages Memphis.dev can process in a given time (Throughput) and what this looks like across different message sizes (128 Bytes, 512 Bytes, 1 KB and 5 KB).

- Measure the total time it takes a message to move from a producer to a consumer in Memphis.dev (Latency). Again we will test this with different message sizes (128 Bytes, 512 Bytes, 1 KB and 5 KB).

Before moving forward, it’s important to understand that the configurations of the producer, consumer, and broker used in our tests may differ from what you require. Our objective isn’t to mimic a real-world setup since every scenario demands a distinct configuration.

Throughput

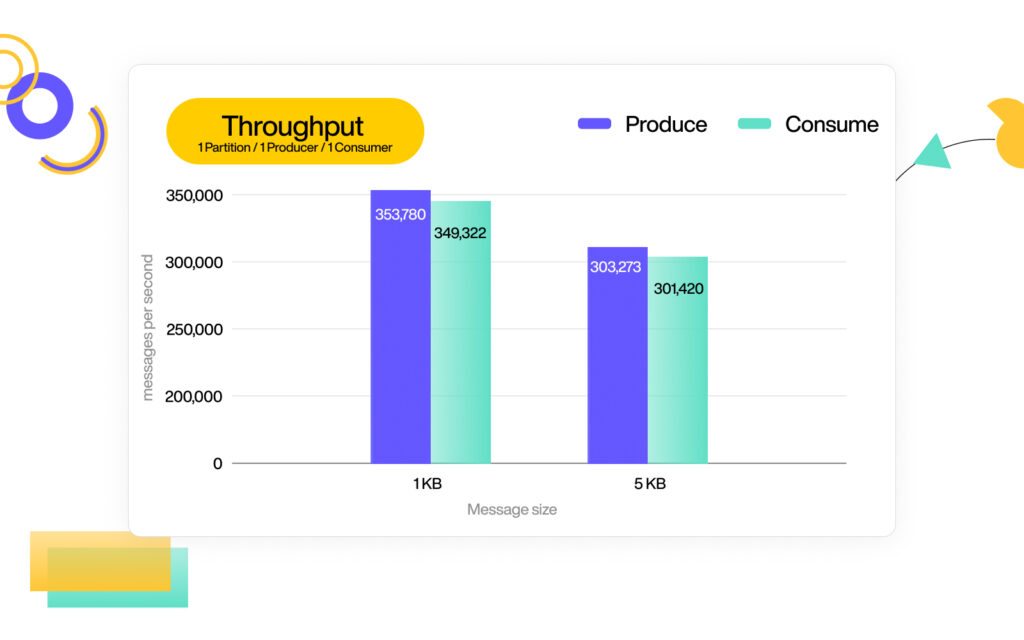

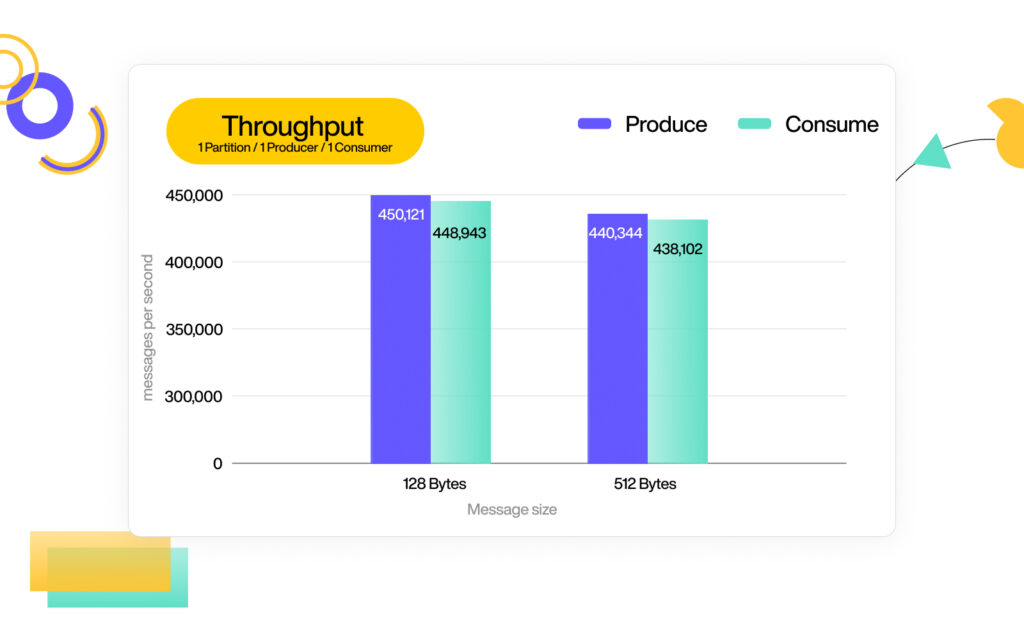

As previously mentioned, our throughput test involved messages of various sizes (128 Bytes, 512 Bytes, 1 KB, and 5 KB), since Memphis works well with both very small and larger messages.

For each of these message sizes, we conducted tests with only 1 producer, 1 consumer, and 1 partition. Memphis scales effectively in both horizontal and vertical dimensions, supporting up to 4096 partitions per station (equivalent to a topic). The results of our experiment are depicted in the charts in this section.

The charts illustrate Memphis’ high performance, reaching over 450,000 messages per second with fairly small 128-byte messages and maintaining over 440,000 messages per second with medium-sized 512-byte messages.
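To put these message rates in perspective, they can be converted into an approximate payload data rate. The short sketch below does that arithmetic for the two figures quoted above, using them as lower bounds. The function name is illustrative, and the result counts payload bytes only, ignoring any protocol or header overhead.

```python
# Convert a message rate into an approximate payload data rate.
# The rates are the lower bounds quoted in the text ("over 450,000" and
# "over 440,000" messages per second); protocol overhead is not included.

def payload_rate_mb_per_s(messages_per_second: int, message_size_bytes: int) -> float:
    """Return payload throughput in megabytes per second (1 MB = 1,000,000 bytes)."""
    return messages_per_second * message_size_bytes / 1_000_000


for rate, size in [(450_000, 128), (440_000, 512)]:
    mb_per_s = payload_rate_mb_per_s(rate, size)
    print(f"{rate:,} msg/s x {size} B = {mb_per_s:.2f} MB/s")
```

In other words, a slightly lower message rate at 512 bytes still moves roughly four times as much payload data per second as the 128-byte run.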

Latency

The table below shows Memphis’ latency distribution across the tested message sizes. Because latency data typically deviates from a normal distribution, percentiles describe it better than a single average: they capture both the typical case and the behavior of the slowest requests.

| Metric | 128 Bytes | 512 Bytes | 1024 Bytes | 5120 Bytes |
| --- | --- | --- | --- | --- |
| Minimum (ms) | 0.6756 | 0.6848 | 0.6912 | 0.7544 |
| 75th percentile (ms) | 0.8445 | 0.856 | 0.864 | 0.943 |
| 95th percentile (ms) | 0.8745 | 0.886 | 0.894 | 0.973 |
| 99th percentile (ms) | 0.9122 | 0.8937 | 0.9017 | 0.9807 |
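If you collect your own latency samples, percentiles like those in the table can be computed with a few lines of code. The sketch below uses the nearest-rank method, which is one common definition among several and is not necessarily the one used by the benchmark tool. The sample data is synthetic and only mimics a right-skewed latency distribution; it is not benchmark output.

```python
import math
import random


def percentile(samples, p):
    """Nearest-rank percentile: the smallest sample such that at least
    p percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))  # 1-based rank
    return ordered[rank - 1]


# Synthetic, right-skewed latencies in milliseconds (illustrative only).
random.seed(7)
latencies_ms = [0.65 + random.lognormvariate(-3.0, 0.6) for _ in range(10_000)]

print(f"min  {min(latencies_ms):.4f} ms")
for p in (50, 75, 95, 99):
    print(f"p{p:<3} {percentile(latencies_ms, p):.4f} ms")
```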

While the table above provides latency data ranging from the minimum to the 99th percentile, our analysis will focus on the 50th percentile (the median) to evaluate Memphis’ latency performance.

The median is a fair metric for assessment because it marks the central point of a distribution: half of the observed values fall at or below it and half at or above it. For example, a median of 7.5 means that half of the measurements are no greater than 7.5. Unlike the mean, the median is not distorted by a handful of unusually slow requests, so it offers a balanced view of typical latency behavior.
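To illustrate why the median is often preferred over the mean for latency data, the small example below compares the two on a hypothetical set of measurements that contains a single slow outlier. The values are made up for illustration and are not taken from the benchmark.

```python
import statistics

# Hypothetical latencies in milliseconds: nine typical requests and one slow outlier.
latencies_ms = [0.70, 0.71, 0.71, 0.72, 0.72, 0.73, 0.73, 0.74, 0.75, 9.50]

mean_ms = statistics.mean(latencies_ms)
median_ms = statistics.median(latencies_ms)

print(f"mean   {mean_ms:.3f} ms")
print(f"median {median_ms:.3f} ms")
```

The single outlier pulls the mean above 1.5 ms, while the median stays between 0.72 ms and 0.73 ms, close to what most requests actually experienced.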

| Metric | 128 Bytes | 512 Bytes | 1024 Bytes | 5120 Bytes |
| --- | --- | --- | --- | --- |
| Median (ms) | 0.7136 | 0.7848 | 0.7912 | 0.8544 |

Benchmark methodology and tooling

This part of the document will detail the server setup implemented for the benchmark tests and explain the methodology used to obtain the throughput and latency figures presented.

To showcase the capabilities of Memphis.dev Cloud’s effortless global reach and serverless experience, we established a Memphis account in the EU region, specifically in AWS eu-central-1, spanning three Availability Zones (AZs). We then conducted benchmark tests from three distinct geographical locations: us-east-2, sa-east-1, and ap-northeast-1.

For the benchmark tests, we used EC2 instances of the t2.medium type with AMI, each equipped with 2 vCPUs and 4 GB of memory and offering low to moderate network performance.

Throughput and Latency

To execute the benchmarks, we ran the load generator on each of the EC2 instances.

The main role of the load generator is to initiate multi-threaded producers and consumers. It employs these producers and consumers to create a large volume of messages, which are then published to the broker and subsequently consumed.
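The general shape of such a load generator can be sketched in a few dozen lines. The example below is not the Memphis benchmark tool: it replaces the broker with an in-process `queue.Queue` (an assumption made so the sketch is runnable anywhere), and the function and parameter names are hypothetical. It shows the pattern described above: several producer threads publish timestamped messages, several consumer threads receive them, and the run reports throughput and latency.

```python
import queue
import statistics
import threading
import time


def run_load(count=20_000, message_size=128, producers=4, consumers=4):
    """Push `count` messages through an in-process queue and time their delivery."""
    broker = queue.Queue()      # stand-in for a real broker (assumption)
    latencies = []              # seconds between publish and receipt
    lock = threading.Lock()
    payload = b"x" * message_size

    def produce(n):
        for _ in range(n):
            broker.put((time.perf_counter(), payload))

    def consume():
        local = []
        while True:
            item = broker.get()
            if item is None:    # sentinel: no more messages
                break
            sent_at, _body = item
            local.append(time.perf_counter() - sent_at)
        with lock:
            latencies.extend(local)

    per_producer = count // producers
    consumer_threads = [threading.Thread(target=consume) for _ in range(consumers)]
    producer_threads = [
        threading.Thread(target=produce, args=(per_producer,)) for _ in range(producers)
    ]

    start = time.perf_counter()
    for t in consumer_threads + producer_threads:
        t.start()
    for t in producer_threads:
        t.join()
    for _ in consumer_threads:  # one sentinel per consumer, queued after all messages
        broker.put(None)
    for t in consumer_threads:
        t.join()
    elapsed = time.perf_counter() - start

    return len(latencies), elapsed, latencies


delivered, elapsed, latencies = run_load()
print(f"delivered {delivered} messages in {elapsed:.3f} s")
print(f"throughput {delivered / elapsed:,.0f} msg/s")
print(f"median latency {statistics.median(latencies) * 1000:.4f} ms")
```

Because everything runs inside one process, the numbers this prints say nothing about Memphis itself; the sketch only illustrates how throughput and latency are derived from timestamps taken on the producer and consumer sides.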

The commands we used for the throughput tests:

mem bench producer --host aws-eu-central-1.cloud.memphis.dev --user **** --password '*****' --account-id ****** --concurrency 4 --message-size 128 --count 500000

mem bench producer --host aws-eu-central-1.cloud.memphis.dev --user **** --password '*****' --account-id ****** --concurrency 4 --message-size 512 --count 500000

mem bench producer --host aws-eu-central-1.cloud.memphis.dev --user **** --password '*****' --account-id ****** --concurrency 4 --message-size 1024 --count 500000

mem bench producer --host aws-eu-central-1.cloud.memphis.dev --user **** --password '*****' --account-id ****** --concurrency 4 --message-size 5120 --count 500000

The commands we used for the latency tests:

mem bench consumer --host aws-eu-central-1.cloud.memphis.dev --user **** --password '*****' --account-id ****** --batch-size 500 --concurrency 4 --message-size 128 --count 500000

mem bench consumer --host aws-eu-central-1.cloud.memphis.dev --user **** --password '*****' --account-id ****** --batch-size 500 --concurrency 4 --message-size 512 --count 500000

mem bench consumer --host aws-eu-central-1.cloud.memphis.dev --user **** --password '*****' --account-id ****** --batch-size 500 --concurrency 4 --message-size 1024 --count 500000

mem bench consumer --host aws-eu-central-1.cloud.memphis.dev --user **** --password '*****' --account-id ****** --batch-size 500 --concurrency 4 --message-size 5120 --count 500000
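The eight commands above differ only in the role (producer or consumer) and the message size, so the test matrix can be generated rather than typed by hand. The sketch below builds the same command lines using only the flags and values shown above; the helper name and the credential placeholders are hypothetical, and the script prints the commands instead of running them.

```python
import shlex

HOST = "aws-eu-central-1.cloud.memphis.dev"
MESSAGE_SIZES = (128, 512, 1024, 5120)  # bytes, as used in the tests above


def bench_command(role, message_size, user, password, account_id):
    """Build one `mem bench` command line using the flags shown in this article."""
    args = [
        "mem", "bench", role,
        "--host", HOST,
        "--user", user,
        "--password", password,
        "--account-id", account_id,
    ]
    if role == "consumer":
        args += ["--batch-size", "500"]
    args += [
        "--concurrency", "4",
        "--message-size", str(message_size),
        "--count", "500000",
    ]
    return shlex.join(args)  # quotes any argument that needs it


# Placeholder credentials (hypothetical); substitute your own before running.
for role in ("producer", "consumer"):
    for size in MESSAGE_SIZES:
        print(bench_command(role, size, "<user>", "<password>", "<account-id>"))
```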

Wrapping up

This concise article has showcased the consistent and stable performance of Memphis.dev Cloud when handling messages of different sizes, read and written by clients across three distinct regions. The results highlight the linear performance and low latency of the system, achieved without the need for clients to undertake any networking or infrastructure efforts to attain global access and low latency.

Ready to spin up your own Memphis account? Create a free Memphis.dev Cloud account.

If you prefer the self-hosted version – Memphis is open-source!